AI <> Crypto Projects That Aren't Complete Bullsh*t

Navigating the intersection of Crypto and Artificial Intelligence.

This is a guest post by 563defi, DeFi head at Blocmates. Make sure to follow him if you enjoy this report.

Introduction

Searching for fresh alpha on the timeline means wading through some hot garbage. When the only barrier to entry for raising five to six figures is a semi-coherent bio and some decent branding, grifters will latch onto every new narrative they can find. And with everyone in TradFi hopping on the AI bandwagon, the “Crypto-AI” narrative has dialed this problem up to 11.

The problem with the vast majority of these projects is that:

- Most crypto projects don’t need AI

- Most AI projects don’t need crypto

Not every DEX needs a built-in AI assistant, and not every chatbot needs an accompanying token to bootstrap its adoption curve. The shoehorning of AI into crypto (and of crypto into AI, for that matter) nearly drove me over the cliff of insanity when I did my initial deep dive into the narrative a while back.

The bad news? Continuing down our current path of further centralizing this tech ends in tears, and the metric shit ton of bogus “AI x Crypto” projects gets in the way of turning the tide.

The good news? There’s a light at the end of the tunnel. Sometimes, AI does indeed benefit from crypto-economics. Likewise, there are some use cases of crypto where AI can solve some real problems.

In today’s piece, we’re going to venture into these key intersections: the little pockets of innovation where these niche ideas overlap to form a whole that is greater than the sum of its parts.

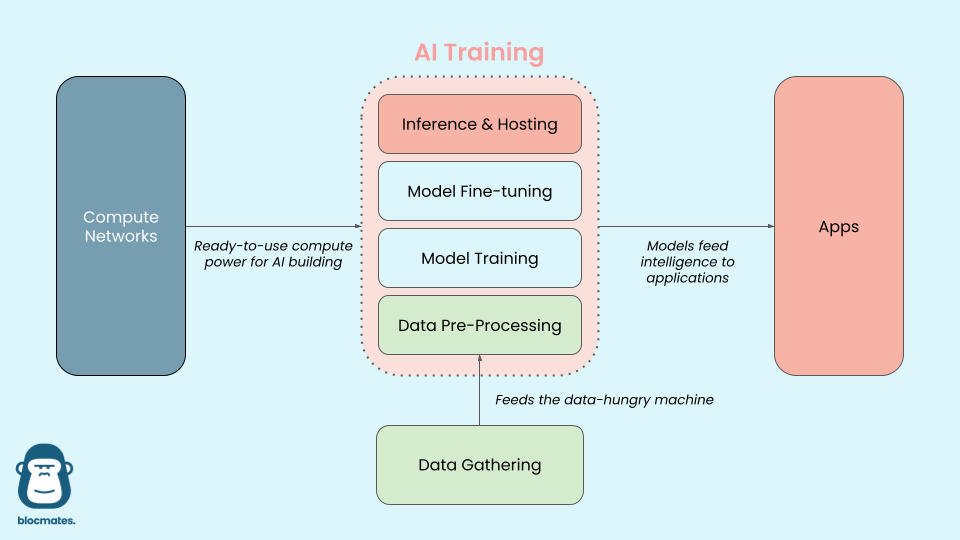

A high-level view of the AI stack

Below is how I like to think about the different verticals involved in the “Crypto-AI” food chain (I also like Tommy’s, if you want to dig a little deeper). Note that this is an extremely simplified view, but hopefully it helps set the stage.

At a high level, here’s how it all works together:

- Data is gathered at a massive scale.

- This data is processed so that machines understand how to ingest and apply it.

- Models are trained on this data to create a general-purpose model.

- That model can then be fine-tuned to handle specific use cases.

- Finally, these models are deployed and hosted so that apps can query them and put them to practical use.

- All of this requires a massive amount of computational resources, which can be run locally or drawn from the cloud.

Let’s explore each of these sectors, with special attention to how different crypto-economic designs could be used to actually improve the standard workflow.

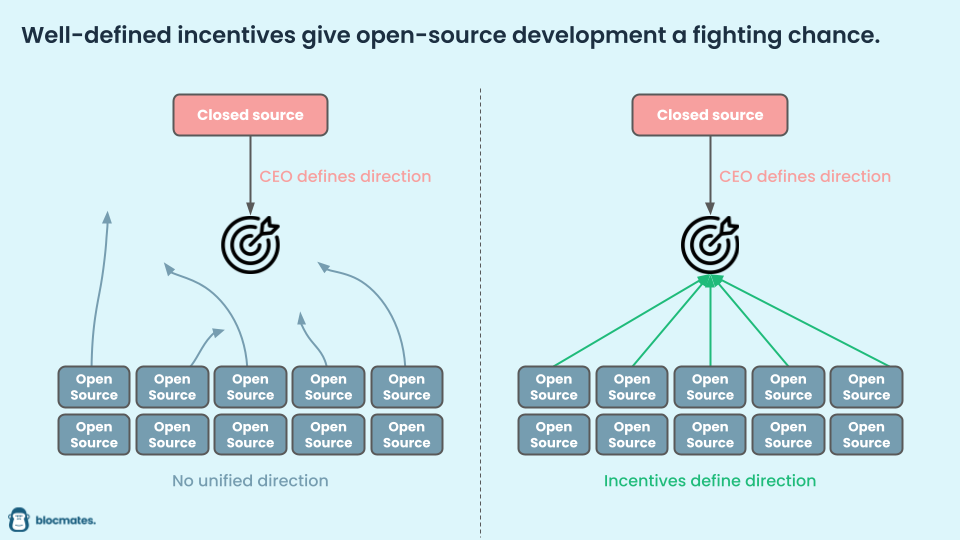

Crypto gives open source a fighting chance

The whole “closed-source” vs. “open-source” debate goes back to the Windows-Linux rivalry and Eric Raymond’s famous essay, The Cathedral and the Bazaar. And while Linux is popular with hobbyists today, about 90% of desktop users opt for Windows. Why? Incentives.

Open-source development, at least from the outside looking in, has a lot of benefits. It allows the maximum number of people to participate in and contribute to the development process. But in this headless structure, there is no unified directive. There is no CEO motivated to get the product into the hands of as many people as possible to maximize the bottom line. In open-source development, the project risks evolving into a chimera, splintering off into tangents at every fork in design philosophy.

And what’s the best way to align incentives? Construct a system that rewards behaviors that further your goal. In other words, put money in the hands of the actors that get us closer to our objectives. With crypto, this can be hardcoded into law.

We’re going to take a look at some projects doing just that.

Decentralized Physical Infrastructure Networks (DePINs)

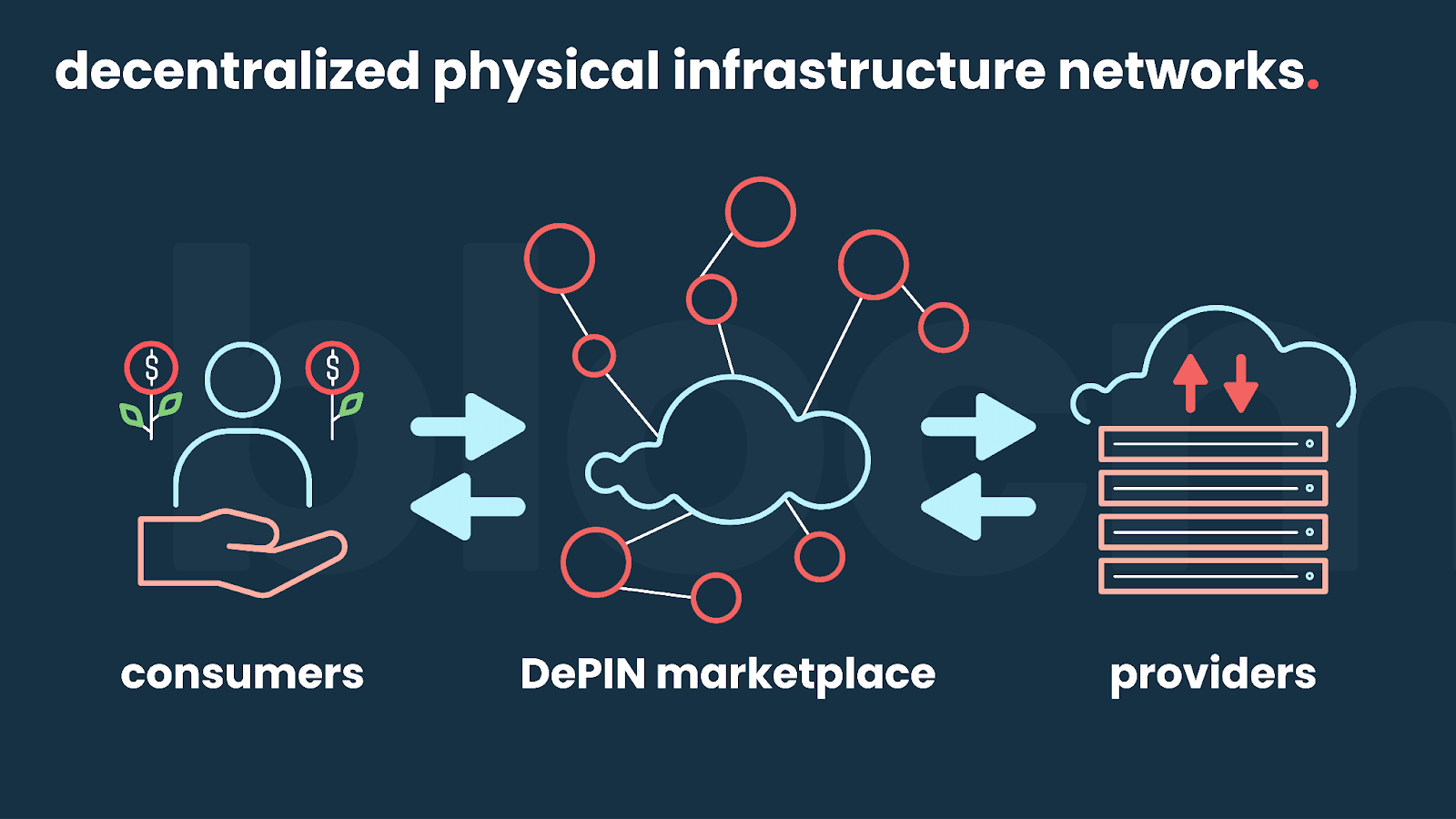

“Oh c’mon, this shit again?” Yeah, I know the DePIN narrative has been run into the ground almost as much as AI itself, but stick with me for a moment. I’ll die on the hill that DePINs are one use case of crypto that actually has a chance at changing the world. Think about it.

What is crypto genuinely good at? Removing middlemen and incentivizing activity.

Bitcoin’s original vision of peer-to-peer electronic cash set out to cut banks out of the equation. In a similar fashion, modern DePINs aim to push out centralizing forces and usher in provably fair marketplace dynamics. And, as we will see, this architecture is ideal for crowdsourcing AI-adjacent networks.

DePINs use early token emissions to ramp up the supply-side (providers), hoping that this attracts sustainable demand from consumers. This is meant to solve the cold-start problem of new marketplaces.

This means that early hardware/software (“node”) providers earn a bunch of tokens and a little bit of cash. As cash starts flowing in from the users who pay for these nodes (ML builders, in our case), it begins to offset the declining emissions until a fully self-sustaining ecosystem is in place (which could take years). Early adopters such as Helium and Hivemapper showed just how effective this design can be.
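To make the emissions-versus-revenue dynamic concrete, here is a toy model in Python. Every number in it (starting emissions, a 5% monthly decay, starting fees, 10% monthly fee growth) is a hypothetical parameter chosen for illustration, not data from Helium, Hivemapper, or any other network. The point is only the shape of the curve: user fees eventually overtake shrinking token rewards.

```python
# Toy model of DePIN provider income: decaying token emissions plus growing
# usage fees. All parameters are hypothetical, for illustration only.


def provider_income(months,
                    initial_emissions=1_000_000.0,  # USD value of tokens emitted in month 0 (hypothetical)
                    decay=0.95,                     # emissions shrink 5% per month (hypothetical)
                    initial_fees=10_000.0,          # USD paid by users in month 0 (hypothetical)
                    fee_growth=1.10):               # fee revenue grows 10% per month (hypothetical)
    """Return a list of (month, emissions, fees) tuples."""
    rows = []
    for m in range(months):
        emissions = initial_emissions * decay ** m
        fees = initial_fees * fee_growth ** m
        rows.append((m, emissions, fees))
    return rows


def crossover_month(rows):
    """First month in which user fees exceed token emissions, or None if they never do."""
    for month, emissions, fees in rows:
        if fees > emissions:
            return month
    return None


rows = provider_income(60)
for month, emissions, fees in rows[::12]:  # print one snapshot per year
    print(f"month {month:2d}: emissions ${emissions:>12,.0f}  fees ${fees:>12,.0f}")
print("fees overtake emissions in month", crossover_month(rows))
```

With these made-up parameters the crossover lands in month 32. Slower fee growth pushes it further out, which is why the path to a self-sustaining network can take years.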

Data networks, a look into Grass

GPT-3’s training data was supposedly drawn from some 45TB of raw text, which equates to about 90 million novels (and yet it still can’t draw a damn circle). With GPT-4 and its successors projected to need more data than literally exists on the surface web, calling AI data-hungry is the understatement of the decade.
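A quick sanity check on the 45TB-to-novels conversion, assuming decimal terabytes, about one byte per character of plain English text, and roughly six characters per word (including spaces):

```latex
\[
\frac{45\ \text{TB}}{90 \times 10^{6}\ \text{novels}}
= \frac{45 \times 10^{12}\ \text{bytes}}{9 \times 10^{7}\ \text{novels}}
= 5 \times 10^{5}\ \text{bytes per novel} \approx 500\ \text{KB}
\]
\[
\frac{5 \times 10^{5}\ \text{characters}}{\approx 6\ \text{characters per word}}
\approx 83{,}000\ \text{words per novel}
\]
```

Around 83,000 words is a typical novel length, so the comparison holds up under these assumptions.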

If you are anyone outside the top dogs (OpenAI, Microsoft, Google, Facebook), acquiring this data is exceedingly difficult. The common tactic for most is web scraping, which is all fine and dandy until you try to scale up. A single Amazon Web Services (AWS) instance trying to scrape tons of sites will get rate-limited in a hurry. This is where Grass comes in.

Grass connects more than two million devices, organizing them to scrape websites from users’ own IP addresses, then collecting and structuring that data and selling it to AI companies in desperate need of it. For their trouble, users who contribute to the Grass Network can earn a steady stream of income, courtesy of the AI companies using their data.
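Why a distributed network sidesteps rate limits can be shown with a toy model. This sketch assumes a hypothetical website that allows each IP address a fixed number of requests per time window, plus a simple round-robin assignment of URLs to nodes. The URLs and IP addresses are placeholders, and none of this describes how Grass actually schedules work.

```python
from collections import defaultdict


class FixedWindowLimiter:
    """Hypothetical site-side rate limiter: each IP gets `limit` requests per window."""

    def __init__(self, limit: int):
        self.limit = limit
        self.counts = defaultdict(int)  # requests seen per IP in the current window

    def allow(self, ip: str) -> bool:
        if self.counts[ip] >= self.limit:
            return False  # this IP is blocked until the window resets
        self.counts[ip] += 1
        return True


def scrape(urls, node_ips, limit):
    """Spread URLs round-robin across nodes; return how many requests succeed in one window."""
    site = FixedWindowLimiter(limit)
    succeeded = 0
    for i, _url in enumerate(urls):
        ip = node_ips[i % len(node_ips)]  # round-robin assignment of work to nodes
        if site.allow(ip):
            succeeded += 1
    return succeeded


urls = [f"https://example.com/page/{n}" for n in range(10_000)]
print(scrape(urls, ["198.51.100.7"], limit=100))                   # one cloud instance: 100
print(scrape(urls, [f"node-{k}" for k in range(200)], limit=100))  # 200 residential nodes: 10000
```

The per-IP limit is the same in both runs; spreading the same workload across many addresses is what multiplies throughput.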

Of course, there’s no token yet, but a future $GRASS token could sweeten the deal for users to download the browser extension (or phone app). Not that Grass needs it: it has already run a masterful referral campaign that onboarded more users than most projects could imagine.

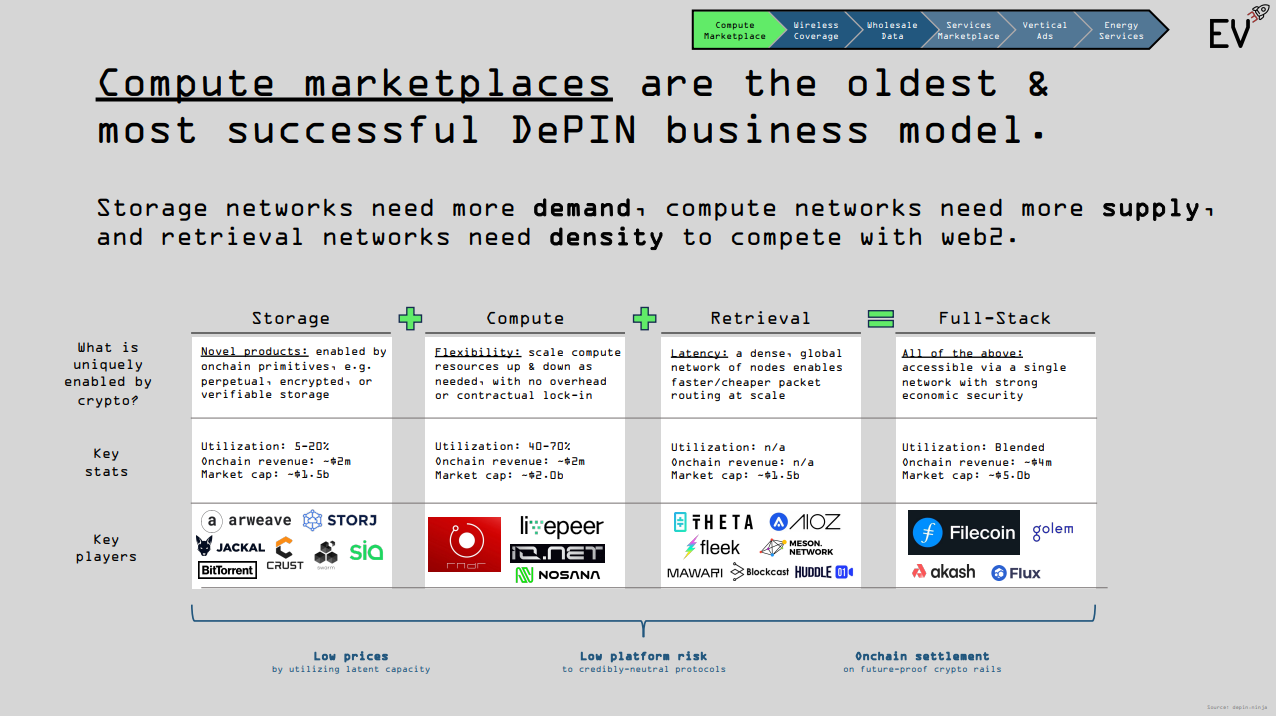

GPU networks, a look into io.net

Perhaps even more important than data is computational power. Did you know that China spent more on imported chips than on imported oil in 2020 and 2021? Absolutely insane, and this is only the beginning. Goodbye petro-dollar, make way for the compute-coin.

Now, there are a lot of GPU DePINs out there, and here is how they generally work. On one side, you have the machine learning engineers/companies that are desperate for compute. On the other, you have data centers, idle mining rigs, and hobbyists with inactive GPUs/CPUs. This global supply is massive but uncoordinated. There’s no easy way to contact 10 different data centers with spare capacity and have them bid for your usage.

A centralized solution would create a rent-seeking middleman whose incentive would be to extract maximum value from each party, but here’s how crypto can help.

Crypto is scarily good at creating marketplace layers that efficiently connect disparate groups of buyers and sellers. A piece of code isn’t beholden to a shareholder’s financial interests.
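As an illustration of that kind of marketplace layer, here is a minimal reverse auction for GPU hours: providers post asks, and a buyer’s order is filled from the cheapest ask upward, up to a price cap. The provider names, prices, and greedy matching rule are all hypothetical. Real GPU DePINs use their own matching and pricing logic.

```python
from dataclasses import dataclass


@dataclass
class Ask:
    provider: str
    price_per_gpu_hour: float  # USD (hypothetical)
    gpu_hours: int             # capacity offered


def fill_order(asks, gpu_hours_needed, max_price):
    """Greedily fill from the cheapest asks; return (fills, total_cost, unfilled_hours)."""
    fills = []
    total_cost = 0.0
    remaining = gpu_hours_needed
    for ask in sorted(asks, key=lambda a: a.price_per_gpu_hour):
        if remaining == 0 or ask.price_per_gpu_hour > max_price:
            break  # order complete, or every remaining ask is above the buyer's cap
        take = min(remaining, ask.gpu_hours)
        fills.append((ask.provider, take, ask.price_per_gpu_hour))
        total_cost += take * ask.price_per_gpu_hour
        remaining -= take
    return fills, total_cost, remaining


asks = [
    Ask("datacenter-a", 2.10, 400),
    Ask("idle-mining-rig", 1.20, 64),
    Ask("hobbyist-gpu", 0.90, 8),
    Ask("datacenter-b", 1.75, 1_000),
]
fills, cost, unfilled = fill_order(asks, gpu_hours_needed=500, max_price=2.00)
print(fills)
print(f"total ${cost:,.2f}, unfilled {unfilled} GPU-hours")
```

The hobbyist and the idle mining rig get filled first because they are cheapest; nobody had to phone ten data centers.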

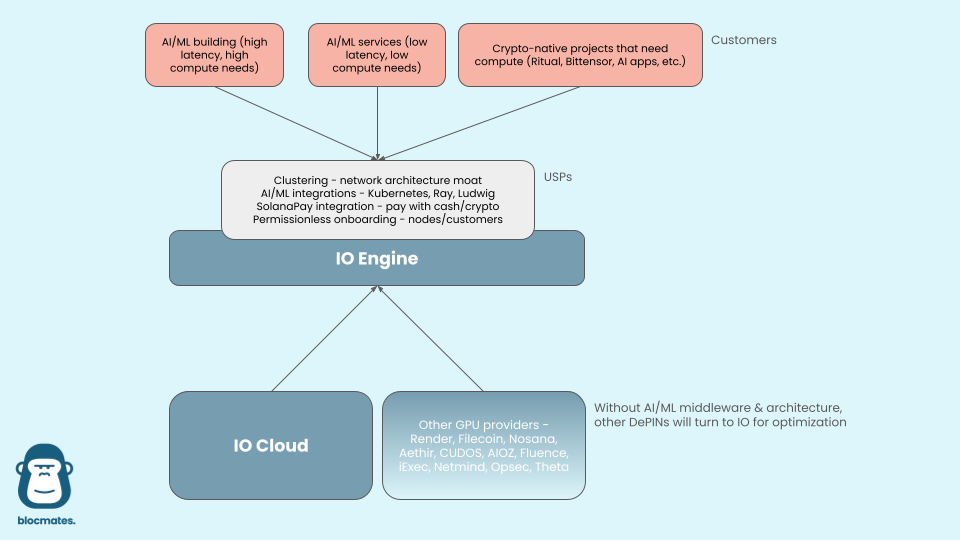

io.net stands out because it introduces some cool new tech that is integral to AI training: its clustering stack. Traditional clustering involves physically hooking up a bunch of GPUs in the same data center so that they can work together on model training. But what if your hardware is scattered across the globe? io.net worked with Ray (the distributed computing framework OpenAI has used to train ChatGPT) to develop clustering middleware that can connect GPUs that aren’t co-located. Pretty cool.
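What clustering ultimately has to enable is data-parallel training: each GPU computes gradients on its own slice of the data, those gradients are averaged across machines (an “all-reduce”), and every worker applies the same update. The pure-Python sketch below simulates that loop for a one-parameter model. It uses no io.net or Ray APIs, and the network hop that makes non-co-located clusters hard is reduced to a plain function call.

```python
import random


def local_gradient(w, shard):
    """Gradient of mean squared error for the model y = w * x on one worker's shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def all_reduce_mean(grads):
    """Stand-in for a network all-reduce: every worker ends up with the average gradient."""
    return sum(grads) / len(grads)


def train(num_workers=4, steps=100, lr=0.5, true_w=3.0, seed=0):
    rng = random.Random(seed)
    data = [(x, true_w * x) for x in (rng.uniform(-1, 1) for _ in range(400))]
    # Equal-size shards, so the mean of shard gradients equals the full-batch gradient.
    shards = [data[i::num_workers] for i in range(num_workers)]
    w = 0.0
    for _ in range(steps):
        grads = [local_gradient(w, shard) for shard in shards]  # in parallel on real hardware
        w -= lr * all_reduce_mean(grads)                         # identical update on every worker
    return w


print(round(train(), 3))  # 3.0
```

The hard engineering lives in the part simulated by `all_reduce_mean`: across the public internet that exchange happens at every step, so latency and bandwidth between far-flung GPUs dominate.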

And while the AWS sign-up process can take days, clusters can be spun up on io.net in 90 seconds, 100% permissionlessly. For these reasons, I could see io.net becoming the hub for all the other GPU DePINs, which could plug into its “IO Engine” to unlock built-in clustering and smooth onboarding. Only possible with crypto.

You’ll notice that most decentralized AI projects with ambitious scope (e.g. Bittensor, Morpheus, Gensyn, Ritual, Sahara) all have explicit “compute” requirements - this is exactly where GPU DePINs ought to slot in. Decentralized AI needs permissionless compute.

Playing with incentive structures

Again, let’s go back to Bitcoin for inspiration. Why do miners incessantly bang out hashes at rapid speed? Because that’s what they are paid to do: Satoshi proposed this architecture because it optimized for security above all else. The lesson? A protocol’s built-in incentive structure determines the end product it produces.
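To see why “banging out hashes” is the whole job, here is a toy proof-of-work. It is simplified: real Bitcoin mining applies SHA-256 twice to an 80-byte block header and compares the result to a numeric target, whereas this sketch hashes a string and counts leading zero hex digits. The incentive logic is the same, though: finding a valid nonce takes brute force, and checking one takes a single hash.

```python
import hashlib


def mine(block_data: str, difficulty: int):
    """Try nonces until the SHA-256 hex digest starts with `difficulty` zeros."""
    prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(prefix):
            return nonce, digest
        nonce += 1


def verify(block_data: str, nonce: int, difficulty: int) -> bool:
    """Checking a claimed solution costs one hash, no matter how long finding it took."""
    digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
    return digest.startswith("0" * difficulty)


block = "block 42: alice pays bob 1 BTC"  # hypothetical block contents
nonce, digest = mine(block, difficulty=4)
print(nonce, digest)
```

Each extra zero multiplies the expected work by 16, which is the knob that makes security expensive to attack and worth paying miners for.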

Bitcoin miners and Ethereum stakers are the players hoovering up newly issued native tokens, because that’s what each protocol wants to incentivize: participants becoming miners and stakers.

In an organization, this direction could come from the CEO, who defines the “vision” or “mission statement.” But humans are fallible and can steer the company off the rails. Computer code, on the other hand, can keep its focus better than even the most brown-nosed wage slave. Let’s take a look at a couple of decentralized projects whose built-in tokenomics focus participants on lofty goals.

AI-building networks, a look into Bittensor

So… incentives.

*Hits blunt* “Dude. What if we got Bitcoin miners to build AIs instead of just solving useless math problems?”

There you go, you made Bittensor. Well, kind of.

The goal of Bittensor is to create several experimental ecosystems for tinkering, each aiming to produce “commoditized intelligence.” One ecosystem (known as a subnet, or “SN” for short) could be focused on developing a language model, another on a financial model, and others on text-to-speech, AI detection, or image generation.

To the Bittensor network, it doesn’t much matter what you want to work on. As long as you can prove that your project is worthy of funding, incentives will flow. Making that case is the job of the Subnet Owner, who registers the subnet and tweaks the rules of its game.

The players of this “game” are known as Miners. These are the ML/AI engineers & teams that build the models. They are locked in a Thunderdome of continuous examination, battling one another for the top spot in order to earn the most rewards.

Validators are the other side of the coin, in charge of doling out the examination and grading the Miners’ work accordingly. If a Validator is found to be colluding to prop up a Miner, it’s ousted.

Remember the incentives:

- Miners earn more when they outcompete other miners within their subnet - this pushes the AI development forward.

- Validators earn more when they accurately identify high- & low-performing miners - this keeps the subnet honest.

- Owners earn more when their subnet produces more useful AI models compared to other subnets - this pushes owners to optimize their “game.”
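A toy version of one reward round shows how these incentives interlock. It is loosely inspired by Bittensor’s Yuma Consensus (stake-weighted agreement among validators, with outlier scores clipped) but is much simpler than the real mechanism. The emission size, the 50/50 miner/validator split, and the agreement formula are all assumptions for illustration.

```python
def weighted_median(values, weights):
    """Lower weighted median: smallest value at which cumulative weight reaches half the total."""
    pairs = sorted(zip(values, weights))
    half = sum(weights) / 2
    running = 0.0
    for value, weight in pairs:
        running += weight
        if running >= half:
            return value
    return pairs[-1][0]


def reward_round(scores, stakes, emission=100.0, miner_share=0.5):
    """scores[v][m]: validator v's score for miner m; stakes[v]: validator v's stake."""
    validators = list(scores)
    miners = list(next(iter(scores.values())))
    # Consensus score per miner: stake-weighted median across validators.
    consensus = {
        m: weighted_median([scores[v][m] for v in validators],
                           [stakes[v] for v in validators])
        for m in miners
    }
    total = sum(consensus.values()) or 1.0
    miner_rewards = {m: emission * miner_share * consensus[m] / total for m in miners}

    # Validator agreement: stake times the fraction of its scores that survive clipping to consensus.
    agreement = {}
    for v in validators:
        given = sum(scores[v].values()) or 1.0
        kept = sum(min(scores[v][m], consensus[m]) for m in miners)
        agreement[v] = stakes[v] * kept / given
    total_agreement = sum(agreement.values()) or 1.0
    validator_rewards = {v: emission * (1 - miner_share) * agreement[v] / total_agreement
                         for v in validators}
    return miner_rewards, validator_rewards


scores = {
    "honest_1": {"miner_a": 0.9, "miner_b": 0.1},
    "honest_2": {"miner_a": 0.8, "miner_b": 0.2},
    "colluder": {"miner_a": 0.0, "miner_b": 1.0},  # tries to prop up miner_b
}
stakes = {"honest_1": 40.0, "honest_2": 35.0, "colluder": 25.0}
miner_rewards, validator_rewards = reward_round(scores, stakes)
print(miner_rewards)
print(validator_rewards)
```

Here the colluding validator holds 25% of the stake but collects only about 7% of validator rewards, and its favorite miner earns no more than the honest majority thinks it deserves.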

You can think of Bittensor as a perpetual rewards machine for AI development. Budding ML engineers could go out and try building something, pitch the idea to VCs, and try to raise some money. Or they could hop on one of the Bittensor subnets as a miner, kick some ass, and hoover up a shitload of TAO. Which seems easier?

There are some top-notch teams building on the network:

- Nous Research are kings of open source. Their subnet flips the script on fine-tuning open-source LLMs. Because models are tested against a constant stream of fresh synthetic data, the leaderboard can’t be gamed (unlike traditional benchmarks such as Hugging Face’s).

- Taoshi’s Proprietary Trading Network is basically an open-source quant trading firm. It tasks its ML contributors with building trading algorithms that predict asset price movements. Its APIs give quant-level trading signals to retail and institutional users alike, and it’s on the fast track to major profitability.

- Cortex.t by the Corcel team serves a dual purpose. First, it incentivizes miners to contribute API access to top-of-the-line models such as GPT-4 and Claude 3, ensuring continuous availability for builders. It also offers synthetic data generation, which is extremely useful for model training as well as benchmarking (which is why Nous uses it). The team’s tools include chat and search apps.
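The point about synthetic evaluation data generalizes: a static benchmark can be memorized, while a fresh stream of generated questions cannot. The sketch below uses simple arithmetic questions as a hypothetical stand-in for real synthetic evaluation data, and compares a “memorizer” that has seen the benchmark answers with a “solver” that actually computes them.

```python
import random


def make_questions(seed, n=100):
    """Generate n synthetic 'what is a + b' questions from a seed."""
    rng = random.Random(seed)
    return [(rng.randint(0, 999), rng.randint(0, 999)) for _ in range(n)]


STATIC_BENCHMARK = make_questions(seed=0)
MEMORIZED = {q: q[0] + q[1] for q in STATIC_BENCHMARK}  # benchmark answers leaked into training


def memorizer(q):
    return MEMORIZED.get(q, 0)  # falls back to guessing 0 on unseen questions


def solver(q):
    return q[0] + q[1]


def accuracy(model, questions):
    return sum(model(q) == q[0] + q[1] for q in questions) / len(questions)


fresh = make_questions(seed=random.SystemRandom().randrange(1, 2**32))  # new set every round
print("static:", accuracy(memorizer, STATIC_BENCHMARK), accuracy(solver, STATIC_BENCHMARK))
print("fresh: ", accuracy(memorizer, fresh), accuracy(solver, fresh))
```

Both models look perfect on the static set; only the one that generalizes survives questions it has never seen.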

If nothing else, Bittensor reiterates the power of incentive structures. All made possible by crypto-economics.

Smart agents, a look into Morpheus

Now, we get to look at both sides of the coin with Morpheus, where:

- Crypto-economic structures are building AI (Crypto helps AI) &

- AI-enabled applications enable new use cases in crypto (AI helps Crypto)

“Smart agents” are just AI-powered models that are trained on smart contracts. They know the ins and outs of all the top DeFi protocols, where to find yield, where to bridge, and how to spot sketchy contracts. They are the “auto-routers” of the future and, in my opinion, they will be how everyone interacts with the blockchain in 5-10 years. In fact, once we get to that point, you might not even know you’re using crypto at all. You’ll just tell a chatbot that you want to move some savings into another type of investment, and it’ll all happen in the background.
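Under the hood, a smart agent’s “auto-routing” boils down to ranking possible actions against a user’s intent. This hypothetical sketch ranks yield venues by expected return net of a flat bridging fee, and drops unaudited contracts as a crude stand-in for spotting sketchy ones. The venue names, APYs, and fee are invented; a real agent would pull live onchain data and check far more.

```python
from dataclasses import dataclass


@dataclass
class Venue:
    name: str      # hypothetical protocol name
    chain: str
    apy: float     # e.g. 0.05 for 5%
    audited: bool


def net_return(venue, amount, days, home_chain, bridge_fee):
    """Expected profit in USD, minus a flat bridge fee if the venue is on another chain."""
    gross = amount * venue.apy * days / 365
    cost = 0.0 if venue.chain == home_chain else bridge_fee
    return gross - cost


def route(amount, days, home_chain, venues, bridge_fee=25.0):
    """Rank audited venues by net return for the user's intent, best first."""
    safe = [v for v in venues if v.audited]  # crude stand-in for "spot sketchy contracts"
    ranked = sorted(safe,
                    key=lambda v: net_return(v, amount, days, home_chain, bridge_fee),
                    reverse=True)
    return [(v.name, round(net_return(v, amount, days, home_chain, bridge_fee), 2))
            for v in ranked]


venues = [
    Venue("LendingPoolX", "ethereum", 0.045, True),
    Venue("L2VaultY", "arbitrum", 0.052, True),
    Venue("DegenFarmZ", "unknown-chain", 0.90, False),
]
# Intent: "put 10,000 USDC on Ethereum to work" for a year, then for a month.
print(route(10_000, 365, "ethereum", venues))
print(route(10_000, 30, "ethereum", venues))
```

The holding period flips the answer: over a full year the higher-yield venue on another chain wins despite the bridge fee, while over 30 days staying put is better. That is exactly the kind of arithmetic a user should never have to do by hand.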

Morpheus embodies the “incentivize it and they will come” message of this section. Their goal is to have a platform where smart agents can propagate and flourish, each building off the success of the last in an ecosystem of minimized externalities.

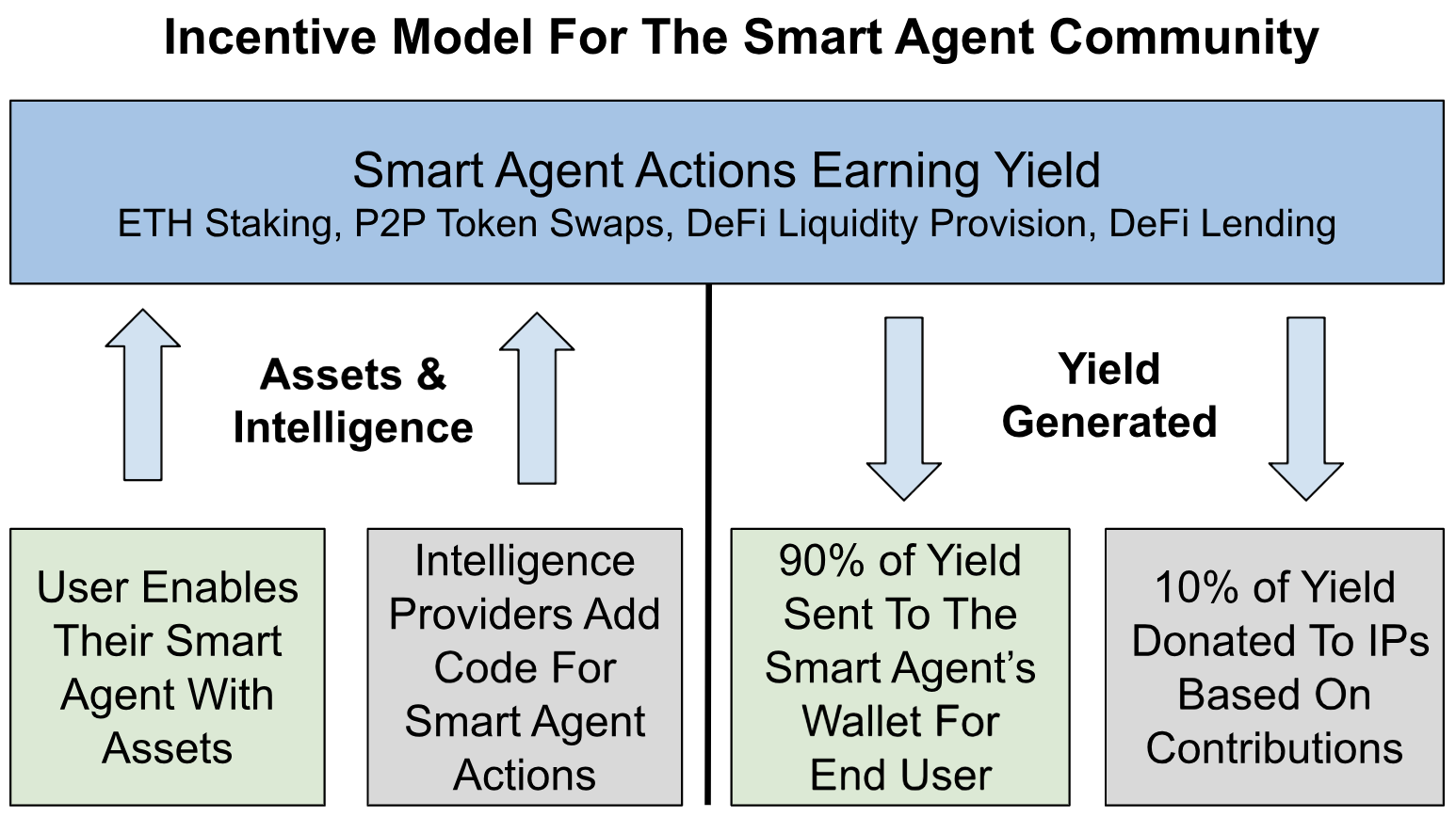

The token inflation structure highlights four major contributors to the protocol:

- Code - the agent builders.

- Community - builds the front-end applications and tools to bring new users to the ecosystem.

- Compute - provides the computational power to run the agents.

- Capital - offers up their yield in order to power the economic machine of Morpheus.

Each of these categories receives an equal cut of $MOR inflation rewards (a small amount is also set aside as an emergency fund), compelling contributors to:

- Build the best agents - creators get paid when their agents are used consistently. Instead of building OpenAI plugins for free, builders get paid instantly.

- Build the best frontends/tools - creators get paid when their creations are used consistently.

- Provide steady computational power - providers get paid when they lend compute.

- Provide liquidity to the project - capital providers earn their share of MOR for keeping the project liquid.
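The split described above can be sketched in a few lines: set aside a small reserve, divide the rest equally among Code, Community, Compute, and Capital, then pay each bucket’s contributors pro rata to their measured contribution. The 4% reserve, the daily emission, and the agent usage numbers are assumptions for illustration, not figures taken from Morpheus’s contracts.

```python
BUCKETS = ("code", "community", "compute", "capital")


def split_emissions(daily_emission, reserve_share=0.04):
    """Set aside the (assumed) reserve, then divide the rest equally among the four buckets."""
    reserve = daily_emission * reserve_share
    per_bucket = (daily_emission - reserve) / len(BUCKETS)
    return {bucket: per_bucket for bucket in BUCKETS}, reserve


def pro_rata(pool, contributions):
    """Pay each contributor in proportion to measured usage (agent calls, compute served, yield...)."""
    total = sum(contributions.values())
    if total == 0:
        return {name: 0.0 for name in contributions}
    return {name: pool * amount / total for name, amount in contributions.items()}


buckets, reserve = split_emissions(10_000.0)
agent_calls = {"yield-agent": 6_000, "bridge-agent": 3_000, "safety-agent": 1_000}  # hypothetical usage
print(reserve, buckets["code"])
print(pro_rata(buckets["code"], agent_calls))
```

Because payouts within a bucket track usage, the most-used agent earns the most, which is the “show me the incentives” logic in miniature.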

And while other AI/smart agent projects exist (a whole lot of them), Morpheus’ tokenomics structure stands out as the “cleanest” example of “show me the incentives and I’ll show you the outcome.”

These smart agents are the ultimate illustration of AI genuinely removing barriers for crypto applications. The user experience of dApps is notoriously bad (though it has improved a lot over the past several years), and the rise of LLMs has lit a fire under the ass of every wannabe founder in Web2 and Web3. And despite the flood of cash grabs, standouts like Morpheus and Wayfinder offer a glimpse of how simple it will one day be to transact onchain.

Putting it all together, the interplay between these systems could look a little bit like the following. Note that this is a laughably simplistic view.

Separating the wheat from the chaff - how to know when a project isn’t complete bullshit

Remember our two broad categories of “Crypto x AI”:

- Crypto that helps AI

- AI that helps Crypto

Throughout this piece, we’ve mostly just explored #1. As we’ve seen, a well-designed token system can set an entire ecosystem up for success.

#1 - Crypto that helps AI

DePIN architectures can help jump-start marketplaces, and creative token incentive structures can coordinate effort towards once-intangible goals for open-source projects. And, yes, there are several other legitimate intersections that I didn’t cover for the sake of brevity:

- Decentralized storage

- Trusted Execution Environments (TEE)

- Retrieval-augmented generation (RAG), i.e. pulling in data in real time

- Zero Knowledge x Machine Learning for verification of inference/provenance

When deciding if a new project really has something to offer, ask yourself:

- If it’s derivative of another established project, is it different enough to move the needle?

- Is it just a wrapped version of open-source software?

- Is it a problem that benefits from crypto rails, or is crypto being shoehorned in?

- Can there really be 100 different “HuggingFaces of crypto”?

#2 - AI that helps Crypto

Where I personally see more vaporware is in this second category. Again, some really cool use cases exist where AI models can remove barriers in crypto UX, especially, as mentioned, with smart agents. Here are a couple of other interesting categories to watch in the world of AI-enabled crypto apps:

- Supercharged intent systems - automating cross-chain operations

- Wallet infrastructure

- Real-time alerting infrastructure for both users and applications

If it’s just a “chatbot with a token,” it’s bullshit to me. Please stop hyping these up for the sake of my sanity. Also:

- Adding AI will not miraculously give your dead app/chain/tool product-market fit

- No one is going to play a bad game just because it has AI characters

- Strapping “AI” to your project does not make it interesting

Thank you for coming to my TED talk.

Where we go from here

Despite all the noise, there are some serious teams working to bring the vision of “decentralized AI” to life, and that’s worth fighting for.

In addition to projects incentivizing open-source model development, decentralized data networks open a new door to upstart AI builders. When the vast majority of OpenAI’s competition can’t afford to make massive deals with Reddit, Tumblr, or WordPress, distributed scraping could even the odds.

A single company will likely never own more computational power than the rest of the world combined, so decentralized GPU networks give anyone, in principle, the capacity to match the top dogs. All you need is a crypto wallet.

We sit at a crossroads today. We have the tools necessary to decentralize the entire AI stack, if we only focus on “Crypto x AI” projects that are actually worth a shit.

Cryptocurrency was envisaged to create a hard money that no one could fuck with, through the power of cryptography. And just as this nascent tech is starting to catch on, a new, scarier challenger has appeared.

Where central banks hold power over just your finances, centralized AI, in the most extreme cases, imparts bias to every bit of data we encounter in our day-to-day lives. It is set to enrich a tiny fraction of a tiny fraction of tech leaders in a self-perpetuating loop of data gathering, intimately personal fine-tuning, and model-mainline-injection into every corner of your existence.

It will know you better than you know yourself. It will know which of your buttons to press to make you laugh more, rage more, and consume more. And however much it may seem otherwise, it does not answer to you.

As it was in the beginning, crypto is a resistive force to this nigh-eventuality of AI centralization. Its ability to coordinate efforts towards a goal of the commons is now competing against an even more formidable foe than central banks. And this time, we’re up against a clock.

Disclaimer: The author of this report, 563, head of research at blocmates, is very active in the field of crypto and AI. He’s very passionate about these topics, and thus, you can assume he has exposure to just about every project mentioned. He believes in putting your money where your mouth is.

The information provided is for general informational purposes only and does not constitute financial, investment, or legal advice. The content is based on sources believed to be reliable, but its accuracy, completeness, and timeliness cannot be guaranteed. Any reliance you place on the information in this document is at your own risk. On Chain Times may contain forward-looking statements that involve risks and uncertainties. Actual results may differ materially from those expressed or implied in such statements. The authors may or may not own positions in the assets or securities mentioned herein. They reserve the right to buy or sell any asset or security discussed at any time without notice. It is essential to consult with a qualified financial advisor or other professional to understand the risks and suitability of any investment decisions you may make. You are solely responsible for conducting your research and due diligence before making any investment choices. Past performance is not indicative of future results. The authors disclaim any liability for any direct, indirect, or consequential loss or damage arising from the use of this document or its content. By accessing On Chain Times, you agree to the terms of this disclaimer.